I vibecoded facepaint.lol: an AI photo editor using Gemini

How I built an AI photo‑editing app in a weekend using a new experimental image generation model.

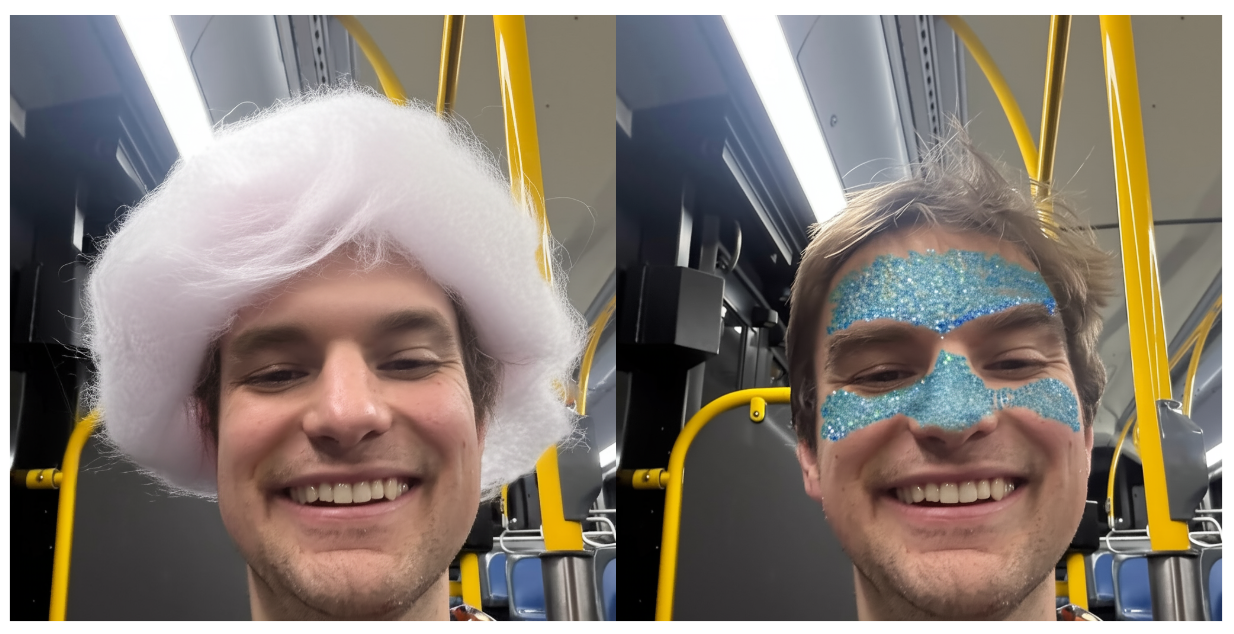

I recently built an AI photo-editing app in just a couple of evenings of vibe coding with v0, Cursor, and Google's Gemini 2.0 Flash Experimental model. You can check it out at facepaint.lol.

The AI Model

I noticed that everyone was talking about OpenAI's latest model generating Studio Ghibli-style imagery, but Google's new experimental image generation model is exceptional at modifying existing portraits.

What makes the model stand out is its ability to preserve the details of an existing image without adding artifacts, so you can add new hairstyles, facial features, or accessories to a photo without specialized skills. I know this sounds simple, but even best-in-class image generation models (including Google's own Imagen 3) tend to perform poorly when editing an existing image.

Unlike some competitors' models, Gemini 2.0 Flash Experimental is accessible via API, so it was straightforward to build into a web app. I found the key examples I needed in the Google AI developer documentation.

Implementing the Site

Using these capabilities, here are some of the results:

Here's how it works:

- Upload a Portrait: Select a photo of yourself or a friend.

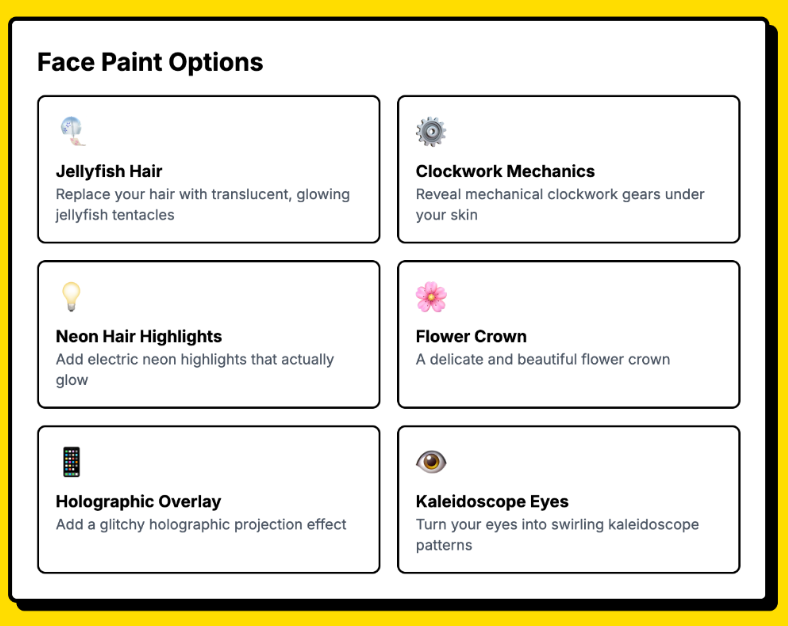

- Choose Your Facepaint: Options include cartoon bunny ears, retro 90s sunglasses, glitter facepaint, and many others.

- See the Results: Click your chosen option and wait a few seconds while Gemini processes your image.

The Tech Stack

The site's aesthetic is inspired by vintage 1990s pop culture imagery from Pinterest. I started the project using Vercel's v0, which took an image from Pinterest and created an expanded prompt to start the Next.js site.

For the tech stack, I used:

- Next.js with Tailwind CSS and shadcn UI components

- Vercel for deployment

- Vercel Blob for image storage

- The Gemini API for the image transformations

The key piece of integration work was figuring out how to use the Gemini API for image editing rather than generation. After some trial and error with Google's documentation, I found success by sending a prompt like "Add colorful musical notes and symbols floating around the person's head" together with the base64-encoded original image in the inlineData field.
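For illustration, here is a minimal TypeScript sketch of what such a request could look like against the Gemini REST `generateContent` endpoint. This is not the app's actual code: the model id, the `responseModalities` setting, and the helper names `buildEditRequest` and `editPortrait` are assumptions made for the example, so check them against the current Google AI documentation before relying on them.

```typescript
// Sketch only: the model id and endpoint below are assumptions, not taken from the app.
const MODEL_ID = "gemini-2.0-flash-exp-image-generation"; // assumed model id
const ENDPOINT =
  `https://generativelanguage.googleapis.com/v1beta/models/${MODEL_ID}:generateContent`;

interface TextPart { text: string }
interface InlineDataPart { inlineData: { mimeType: string; data: string } }
type Part = TextPart | InlineDataPart;

interface EditRequest {
  contents: { parts: Part[] }[];
  generationConfig: { responseModalities: string[] };
}

// Build the JSON body: the edit instruction plus the original image as base64.
function buildEditRequest(
  prompt: string,
  imageBase64: string,
  mimeType = "image/jpeg",
): EditRequest {
  return {
    contents: [
      { parts: [{ text: prompt }, { inlineData: { mimeType, data: imageBase64 } }] },
    ],
    // Ask for an image in the response (assumed to be required for image output).
    generationConfig: { responseModalities: ["TEXT", "IMAGE"] },
  };
}

// Send the request and return the first image part (base64) in the response, if any.
async function editPortrait(
  apiKey: string,
  prompt: string,
  imageBase64: string,
): Promise<string | undefined> {
  const res = await fetch(`${ENDPOINT}?key=${encodeURIComponent(apiKey)}`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildEditRequest(prompt, imageBase64)),
  });
  if (!res.ok) throw new Error(`Gemini request failed: ${res.status}`);
  const json = await res.json();
  const parts: Part[] = json.candidates?.[0]?.content?.parts ?? [];
  const image = parts.find((p): p is InlineDataPart => "inlineData" in p);
  return image?.inlineData.data;
}
```

Under these assumptions, a call such as `editPortrait(apiKey, "Add colorful musical notes and symbols floating around the person's head", base64Image)` would resolve to the base64 data of the edited image, or `undefined` if the response contained no image part.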

User Experience Details

To make the experience more engaging while users wait for image processing, I added playful loading messages like these:

- "Wrangling rainbow-tailed unicorns for color inspiration... 🦄"

- "Consulting with time-traveling art critics from the future... 🔮"

- "Dipping brushes in liquid starlight and bottled daydreams... ✨"
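One simple way to show messages like these is to pick one at random each time a request starts. The sketch below is an assumption about how that could be done, not the site's actual code; `pickLoadingMessage` is a hypothetical helper, and its `random` parameter exists only so the choice can be made deterministic in tests.

```typescript
// The three example messages quoted above; the real app may have more.
const LOADING_MESSAGES: string[] = [
  "Wrangling rainbow-tailed unicorns for color inspiration... 🦄",
  "Consulting with time-traveling art critics from the future... 🔮",
  "Dipping brushes in liquid starlight and bottled daydreams... ✨",
];

// Pick one message. `random` must return a number in [0, 1), like Math.random.
function pickLoadingMessage(random: () => number = Math.random): string {
  const index = Math.floor(random() * LOADING_MESSAGES.length);
  return LOADING_MESSAGES[index];
}
```

In a React component, the result of `pickLoadingMessage()` could be stored in state when the upload begins and rendered next to a spinner until the edited image arrives.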

The app automatically crops uploaded images to focus on the face, which improves the accuracy of the model's edits. Generated images are stored in Vercel Blob so users can revisit and re-edit previous creations.
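The post does not say how the face crop is computed, so the following is only a sketch of one plausible approach: take a face bounding box (from whatever detector is available, which is outside this example), pad it, and clamp the resulting square to the image bounds. The `Box` type, the `faceCropRegion` helper, and the default padding value are all hypothetical.

```typescript
interface Box { x: number; y: number; width: number; height: number }

// Expand a face box into a padded square crop that stays inside the image.
// `padding` is the extra margin on each side, as a fraction of the face's larger dimension.
function faceCropRegion(
  face: Box,
  imageWidth: number,
  imageHeight: number,
  padding = 0.5,
): Box {
  // The square's side: the padded face size, but never larger than the image itself.
  const side = Math.floor(
    Math.min(Math.max(face.width, face.height) * (1 + 2 * padding), imageWidth, imageHeight),
  );
  const centerX = face.x + face.width / 2;
  const centerY = face.y + face.height / 2;
  // Center the square on the face, then clamp its top-left corner to the image bounds.
  const x = Math.min(Math.max(centerX - side / 2, 0), imageWidth - side);
  const y = Math.min(Math.max(centerY - side / 2, 0), imageHeight - side);
  return { x: Math.round(x), y: Math.round(y), width: side, height: side };
}
```

For example, a 200×200 face box at (400, 300) in a 1000×800 image yields a 400×400 crop whose top-left corner is at (300, 200). The returned region could then be passed to a canvas `drawImage` call or an image library before the cropped image is sent to the model.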

Development Process and Lessons Learned

I built facepaint.lol over just a couple of evenings coding in Cursor. What helped me move quickly:

- Using Vercel's v0 to generate a solid start that I could export as a Zip file

- Next.js so I could get the app running with `pnpm install` and `pnpm run dev`

- Testing the AI prompts in the Gemini AI Sandbox

The biggest technical challenge was understanding how to integrate the new Gemini API for image modification rather than generation from scratch. I initially found examples from Google AI Studio, but the generated code didn't properly load an existing image. After exploring the documentation further, I found the right approach using the inlineData field.

What's Next

Future plans for facepaint.lol include:

- Making it easier to share on social media. I hacked together a share page but X doesn’t like the .lol domain 😔

- Adding more facepaint options and potentially giving the user more controls

- Testing other text-to-image models (e.g. when the new OpenAI model is available via API)

- Potentially exploring animation capabilities

Try it at facepaint.lol—I'd love to see what transformations you create!